On August 25, Alibaba Cloud launched an open-source Large Vision Language Model (LVLM) named Qwen-VL. The LVLM is based on Alibaba Cloud’s 7-billion-parameter foundational language model Qwen-7B. In addition to capabilities such as image-text recognition, description, and question answering, Qwen-VL introduces new features including visual location recognition and image-text comprehension, the company said in a statement. These functions enable the model to identify locations in pictures and to provide users with guidance based on the information extracted from images, the firm added. The model can be applied in various scenarios including image and document-based question answering, image caption generation, and fine-grained visual recognition. Both Qwen-VL and its visual AI assistant Qwen-VL-Chat are currently available for free and commercial use on Alibaba’s “Model as a Service” platform ModelScope. [Alibaba Cloud statement, in Chinese]

Related Articles

2025-06-26 09:49

831 views

NYT mini crossword answers for April 24, 2025

The Mini is a bite-sized version of The New York Times' revered daily crossword. While the crossword

Read More

2025-06-26 09:41

1867 views

On the Shelf by Sadie Stein

On the ShelfBy Sadie SteinSeptember 28, 2011BulletinH.G. WellsA cultural news roundup.Jewish poet an

Read More

2025-06-26 09:26

2106 views

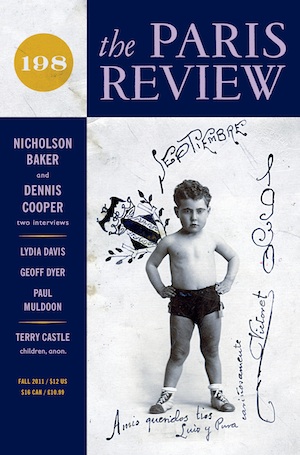

Talking Dirty with Our Fall Issue by Sadie Stein

Talking Dirty with Our Fall IssueBy Sadie SteinSeptember 6, 2011BulletinIt avails not, neither earth

Read More